BRIEF Descriptor

BRIEF (Binary Robust Independent Elementary Features) is an efficient feature point descriptor. It remains highly discriminative even with a relatively small number of bits, and it is computed using simple intensity difference tests. BRIEF has no fixed sampling pattern, so the point pairs can be chosen anywhere in the S×S patch.

To build a BRIEF descriptor of length n, we need to choose n point pairs (Xi, Yi). We denote the vectors of points Xi and Yi by X and Y, respectively.
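As an illustration of the intensity test, here is a minimal sketch in plain Julia, not the ImageFeatures.jl implementation. Following the convention of the BRIEF paper, bit i is 1 when the pixel at offset Xi is darker than the pixel at offset Yi, both offsets measured from the keypoint. The function name `brief_bits` is hypothetical, and the sketch skips the Gaussian smoothing of the patch that BRIEF normally applies before running the tests.

```julia
# Hypothetical sketch of the BRIEF binary test (not the ImageFeatures.jl code).
# Bit i is 1 when the intensity at kp + X[i] is lower than at kp + Y[i].
function brief_bits(img::AbstractMatrix{<:Real}, kp::CartesianIndex{2},
                    X::Vector{CartesianIndex{2}}, Y::Vector{CartesianIndex{2}})
    length(X) == length(Y) || throw(ArgumentError("X and Y must have equal length"))
    bits = falses(length(X))                 # BitVector of length n
    for i in eachindex(X)
        bits[i] = img[kp + X[i]] < img[kp + Y[i]]
    end
    return bits
end

# Example: a 5x5 intensity ramp, keypoint at the centre, n = 3 pairs
img = Float64[r + c for r in 1:5, c in 1:5]
kp = CartesianIndex(3, 3)
X = [CartesianIndex(-1, 0), CartesianIndex(0, 1), CartesianIndex(1, 1)]
Y = [CartesianIndex(1, 0), CartesianIndex(0, -1), CartesianIndex(-1, -1)]
d = brief_bits(img, kp, X, Y)    # [1, 0, 0]
```

Only the first pair compares a darker pixel against a brighter one, so only the first bit is set.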

ImageFeatures.jl provides five methods for choosing the vectors X and Y:

- `random_uniform`: X and Y are sampled uniformly at random.
- `gaussian`: X and Y are sampled at random from a Gaussian distribution, so locations closer to the center of the patch are preferred.
- `gaussian_local`: X and Y are sampled at random from Gaussian distributions. First X is sampled with a standard deviation of 0.04 * S^2, then each Yi is sampled from a Gaussian distribution with mean Xi and standard deviation 0.01 * S^2.
- `random_coarse`: X and Y are sampled at random from discrete locations on a coarse polar grid.
- `center_sample`: for every i, Xi is (0, 0), and Yi takes all possible values on a coarse polar grid.
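To make the difference between the first two strategies concrete, the sketch below draws offset pairs uniformly and from a Gaussian inside an S×S window. The helper names `uniform_pairs` and `gaussian_pairs` are hypothetical, and this is not how ImageFeatures.jl implements `random_uniform` or `gaussian`. The choice σ = S/5 (variance S²/25) is an assumption borrowed from the original BRIEF paper, and Gaussian samples that fall outside the window are simply clamped to its border.

```julia
using Random

# Hypothetical helpers sketching two sampling strategies (not ImageFeatures.jl code).
# Offsets lie in -S÷2:S÷2 around the keypoint.
function uniform_pairs(rng::AbstractRNG, n::Int, S::Int)
    h = S ÷ 2
    X = [CartesianIndex(rand(rng, -h:h), rand(rng, -h:h)) for _ in 1:n]
    Y = [CartesianIndex(rand(rng, -h:h), rand(rng, -h:h)) for _ in 1:n]
    return X, Y
end

function gaussian_pairs(rng::AbstractRNG, n::Int, S::Int; σ = S / 5)
    h = S ÷ 2
    # one rounded Gaussian coordinate, clamped to the window
    sample_coord() = clamp(round(Int, σ * randn(rng)), -h, h)
    X = [CartesianIndex(sample_coord(), sample_coord()) for _ in 1:n]
    Y = [CartesianIndex(sample_coord(), sample_coord()) for _ in 1:n]
    return X, Y
end

rng = MersenneTwister(123)
Xu, Yu = uniform_pairs(rng, 256, 10)
Xg, Yg = gaussian_pairs(rng, 256, 10)
```

With these settings the Gaussian offsets cluster near the patch center, while the uniform ones spread evenly over the window.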

As with other binary descriptors, the BRIEF distance measure is the number of bits that differ between two binary strings. It can also be computed as the sum (the number of set bits) of the XOR of the two strings.
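In Julia this distance can be written directly from the definition. The minimal sketch below uses a hypothetical helper `hamming` that XORs two `BitVector`s and counts the ones; the second method shows the same computation on descriptors packed into 64-bit words, where it reduces to a single popcount.

```julia
# Hamming distance between two bit strings: XOR, then count the ones.
hamming(a::BitVector, b::BitVector) = count(a .⊻ b)

# Same distance for descriptors packed into 64-bit words: popcount of the XOR.
hamming(x::UInt64, y::UInt64) = count_ones(x ⊻ y)

a = BitVector(Bool[1, 0, 1, 1, 0, 0, 1, 0])
b = BitVector(Bool[1, 1, 1, 0, 0, 0, 1, 1])
hamming(a, b)                                      # 3
hamming(UInt64(0b10110010), UInt64(0b11100011))    # 3
```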

BRIEF is a very simple feature descriptor: it provides no scale or rotation invariance, only translation invariance. If you need those properties, see the ORB, BRISK and FREAK descriptor examples.

Example

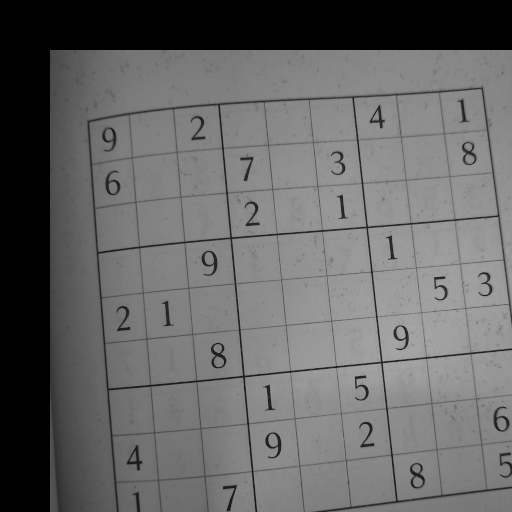

Let's look at a simple example in which the BRIEF descriptor is used to match two images, one of which has been shifted by (50, 50) pixels. This example uses the sudoku image from the TestImages package.

First, let's create the two images that we will match using BRIEF.

```julia
using ImageFeatures, TestImages, Images, ImageDraw, CoordinateTransformations

img = testimage("sudoku")
img1 = Gray.(img)                       # work on a grayscale copy
trans = Translation(-50, -50)
img2 = warp(img1, trans, axes(img1))    # content of img1 shifted by (50, 50), same axes
```

To compute descriptors, we first need keypoints. In this tutorial we use FAST corners to generate them (see the fastcorners function).
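For intuition about what `fastcorners(img, 12, 0.4)` looks for, here is a simplified FAST segment test for a single pixel: the pixel counts as a corner if at least n contiguous pixels on a 16-pixel circle of radius 3 are all brighter than the center plus t, or all darker than the center minus t. This is a sketch with a hypothetical `is_fast_corner` function, not the library's code, and it makes no attempt at the speed-ups of real FAST implementations. The example uses n = 9 because, with this exact test, the 90° corner of an axis-aligned square produces only 11 contiguous darker pixels.

```julia
# Bresenham circle of radius 3: 16 offsets listed in order around the circle.
const CIRCLE = [CartesianIndex(-3, 0), CartesianIndex(-3, 1), CartesianIndex(-2, 2), CartesianIndex(-1, 3),
                CartesianIndex(0, 3), CartesianIndex(1, 3), CartesianIndex(2, 2), CartesianIndex(3, 1),
                CartesianIndex(3, 0), CartesianIndex(3, -1), CartesianIndex(2, -2), CartesianIndex(1, -3),
                CartesianIndex(0, -3), CartesianIndex(-1, -3), CartesianIndex(-2, -2), CartesianIndex(-3, -1)]

# Hypothetical FAST segment test: true if at least n contiguous circle pixels are
# all brighter than img[p] + t or all darker than img[p] - t (runs may wrap around).
function is_fast_corner(img::AbstractMatrix{<:Real}, p::CartesianIndex{2}, n::Int, t::Real)
    c = img[p]
    vals = [img[p + o] for o in CIRCLE]
    for sgn in (1, -1)                      # 1: brighter run, -1: darker run
        flags = [sgn * (v - c) > t for v in vals]
        streak = 0
        for f in vcat(flags, flags)         # doubling the circle handles wrap-around
            streak = f ? streak + 1 : 0
            streak >= n && return true
        end
    end
    return false
end

square = zeros(9, 9); square[5:9, 5:9] .= 1.0    # bright square, corner at (5, 5)
edge = zeros(9, 9); edge[:, 5:9] .= 1.0          # straight vertical edge
is_fast_corner(square, CartesianIndex(5, 5), 9, 0.4)   # true
is_fast_corner(edge, CartesianIndex(5, 5), 9, 0.4)     # false
```

A point on a straight edge sees only 7 contiguous darker pixels, so the segment test rejects it, while the square's corner passes.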

```julia
# FAST corners: 12 contiguous circle pixels, intensity threshold 0.4
keypoints_1 = Keypoints(fastcorners(img1, 12, 0.4))
keypoints_2 = Keypoints(fastcorners(img2, 12, 0.4))
```

```
123-element Vector{CartesianIndex{2}}:
 CartesianIndex(254, 214)
 CartesianIndex(203, 254)
 CartesianIndex(439, 267)
 CartesianIndex(444, 267)
 CartesianIndex(437, 268)
 CartesianIndex(445, 268)
 CartesianIndex(436, 269)
 CartesianIndex(434, 271)
 CartesianIndex(448, 271)
 CartesianIndex(436, 277)
 ⋮
 CartesianIndex(295, 489)
 CartesianIndex(279, 490)
 CartesianIndex(294, 490)
 CartesianIndex(287, 491)
 CartesianIndex(293, 491)
 CartesianIndex(429, 498)
 CartesianIndex(430, 499)
 CartesianIndex(431, 500)
 CartesianIndex(408, 505)
```

To create a BRIEF descriptor, first define its parameters by calling the BRIEF constructor.

```julia
brief_params = BRIEF(size = 256, window = 10, seed = 123)
```

```
BRIEF{typeof(gaussian)}(256, 10, 1.4142135623730951, ImageFeatures.gaussian, 123)
```

Now pass each image, together with its keypoints and the parameters, to the create_descriptor function.

```julia
# Each call returns the descriptors and the keypoints they were computed for
desc_1, ret_keypoints_1 = create_descriptor(img1, keypoints_1, brief_params)
desc_2, ret_keypoints_2 = create_descriptor(img2, keypoints_2, brief_params)
```

```
(BitVector[[1, 0, 0, 1, 0, 0, 1, 1, 1, 0 … 0, 1, 1, 0, 0, 1, 1, 0, 1, 1], [1, 0, 0, 1, 0, 0, 1, 1, 1, 0 … 0, 0, 1, 1, 1, 1, 1, 0, 1, 0], [1, 1, 0, 1, 1, 1, 1, 1, 1, 0 … 0, 1, 1, 0, 1, 1, 1, 0, 1, 1], [0, 0, 1, 0, 1, 1, 1, 1, 1, 0 … 0, 1, 1, 0, 1, 1, 0, 0, 1, 0], [0, 0, 0, 1, 1, 1, 1, 1, 1, 0 … 0, 1, 1, 0, 1, 1, 1, 0, 1, 1], [0, 0, 1, 0, 1, 0, 1, 1, 0, 0 … 0, 1, 1, 0, 0, 1, 0, 0, 1, 0], [0, 0, 0, 1, 1, 1, 1, 1, 1, 0 … 0, 1, 1, 0, 1, 1, 1, 0, 1, 1], [0, 0, 0, 1, 0, 1, 1, 1, 1, 1 … 0, 0, 1, 0, 1, 1, 1, 0, 1, 0], [0, 0, 0, 1, 0, 0, 1, 1, 1, 0 … 1, 0, 1, 0, 0, 1, 0, 1, 1, 0], [0, 0, 0, 1, 0, 0, 1, 1, 1, 0 … 0, 1, 1, 0, 1, 1, 0, 0, 1, 0] … [0, 0, 0, 1, 0, 1, 1, 0, 1, 1 … 1, 1, 1, 0, 1, 1, 1, 0, 1, 0], [0, 1, 0, 0, 1, 1, 0, 1, 1, 1 … 1, 1, 1, 0, 1, 1, 1, 0, 1, 0], [0, 0, 1, 0, 1, 0, 1, 1, 0, 0 … 1, 1, 1, 1, 0, 1, 0, 0, 0, 1], [0, 1, 0, 0, 1, 1, 1, 1, 1, 1 … 0, 1, 0, 1, 1, 1, 1, 0, 1, 1], [1, 0, 0, 1, 0, 0, 1, 1, 1, 0 … 0, 1, 1, 0, 0, 1, 0, 0, 1, 1], [0, 0, 0, 0, 1, 1, 1, 1, 0, 1 … 0, 1, 0, 1, 1, 1, 1, 0, 0, 1], [0, 0, 1, 0, 1, 0, 1, 1, 0, 0 … 0, 1, 1, 0, 0, 1, 0, 0, 1, 0], [0, 0, 0, 1, 0, 0, 1, 1, 1, 0 … 0, 0, 1, 0, 0, 1, 0, 1, 1, 0], [0, 0, 0, 1, 0, 0, 1, 1, 1, 0 … 1, 0, 1, 0, 0, 1, 0, 1, 1, 0], [0, 0, 0, 1, 0, 0, 0, 1, 1, 1 … 1, 1, 1, 1, 1, 1, 1, 0, 0, 0]], CartesianIndex{2}[CartesianIndex(254, 214), CartesianIndex(203, 254), CartesianIndex(439, 267), CartesianIndex(444, 267), CartesianIndex(437, 268), CartesianIndex(445, 268), CartesianIndex(436, 269), CartesianIndex(434, 271), CartesianIndex(448, 271), CartesianIndex(436, 277) … CartesianIndex(296, 487), CartesianIndex(295, 489), CartesianIndex(279, 490), CartesianIndex(294, 490), CartesianIndex(287, 491), CartesianIndex(293, 491), CartesianIndex(429, 498), CartesianIndex(430, 499), CartesianIndex(431, 500), CartesianIndex(408, 505)])
```

The resulting descriptors can be used to find correspondences between the two images with the match_keypoints function.
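Conceptually, matching binary descriptors is a nearest-neighbor search under the Hamming distance. The sketch below is a hypothetical brute-force matcher, not the source of `match_keypoints`. It assumes, as the value 0.1 in the call suggests, that the threshold is a fraction of the descriptor length, and unlike a production matcher it does not enforce one-to-one matches.

```julia
# Fraction of differing bits between two descriptors of equal length.
normalized_hamming(a::BitVector, b::BitVector) = count(a .⊻ b) / length(a)

# Hypothetical brute-force matcher: for each descriptor in desc1, take the nearest
# descriptor in desc2 and keep the pair only if its distance is below `threshold`.
function match_brute_force(kps1, kps2, desc1::Vector{BitVector}, desc2::Vector{BitVector}, threshold::Real)
    matches = Vector{Vector{CartesianIndex{2}}}()
    for (i, d1) in enumerate(desc1)
        dists = [normalized_hamming(d1, d2) for d2 in desc2]
        j = argmin(dists)
        dists[j] < threshold && push!(matches, [kps1[i], kps2[j]])
    end
    return matches
end

kps1 = [CartesianIndex(10, 10), CartesianIndex(20, 30)]
kps2 = [CartesianIndex(60, 60), CartesianIndex(70, 80)]
d1 = [BitVector(Bool[1, 0, 1, 1, 0, 0, 1, 0, 1, 1]), BitVector(Bool[0, 0, 0, 1, 1, 1, 0, 1, 0, 0])]
d2 = [BitVector(Bool[0, 0, 0, 1, 1, 1, 0, 1, 0, 1]), BitVector(Bool[1, 0, 1, 1, 0, 0, 1, 0, 1, 1])]
m = match_brute_force(kps1, kps2, d1, d2, 0.2)
```

Here the first descriptor matches its exact copy (distance 0) and the second matches a descriptor differing in one of ten bits (distance 0.1); lowering the threshold to 0.05 would drop the second match.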

matches = match_keypoints(ret_keypoints_1, ret_keypoints_2, desc_1, desc_2, 0.1)123-element Vector{Vector{CartesianIndex{2}}}:

[CartesianIndex(204, 164), CartesianIndex(254, 214)]

[CartesianIndex(153, 204), CartesianIndex(203, 254)]

[CartesianIndex(389, 217), CartesianIndex(439, 267)]

[CartesianIndex(394, 217), CartesianIndex(444, 267)]

[CartesianIndex(387, 218), CartesianIndex(437, 268)]

[CartesianIndex(395, 218), CartesianIndex(445, 268)]

[CartesianIndex(386, 219), CartesianIndex(436, 269)]

[CartesianIndex(384, 221), CartesianIndex(434, 271)]

[CartesianIndex(398, 221), CartesianIndex(448, 271)]

[CartesianIndex(386, 227), CartesianIndex(436, 277)]

⋮

[CartesianIndex(245, 439), CartesianIndex(295, 489)]

[CartesianIndex(229, 440), CartesianIndex(279, 490)]

[CartesianIndex(244, 440), CartesianIndex(294, 490)]

[CartesianIndex(237, 441), CartesianIndex(287, 491)]

[CartesianIndex(243, 441), CartesianIndex(293, 491)]

[CartesianIndex(379, 448), CartesianIndex(429, 498)]

[CartesianIndex(380, 449), CartesianIndex(430, 499)]

[CartesianIndex(381, 450), CartesianIndex(431, 500)]

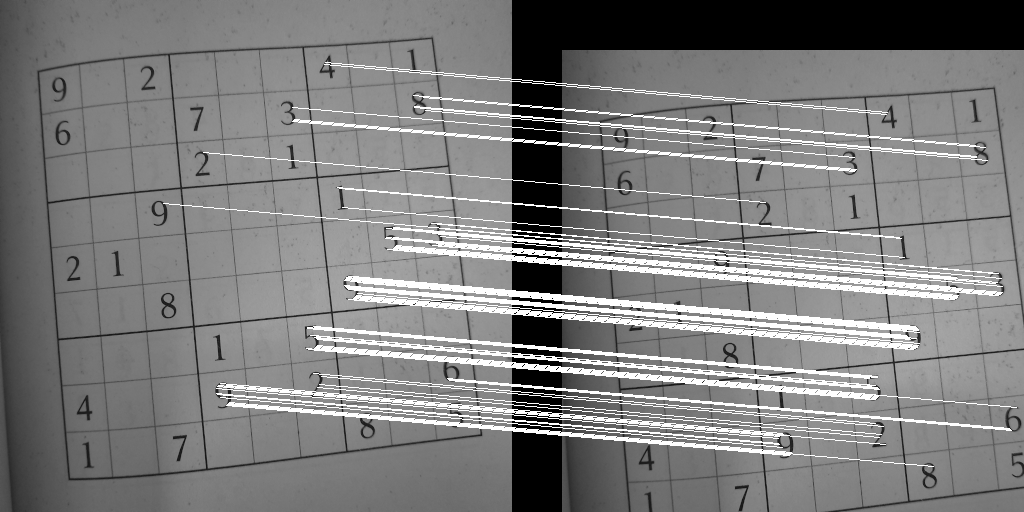

[CartesianIndex(358, 455), CartesianIndex(408, 505)]Просмотреть результаты можно с помощью пакета ImageDraw.jl.

grid = hcat(img1, img2)

offset = CartesianIndex(0, size(img1, 2))

map(m -> draw!(grid, LineSegment(m[2] + offset,m[1] )), matches)

grid

grid выводит результаты
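Because the true shift between the two images is known here, the matches can also be checked numerically. The sketch below uses a hypothetical helper `inlier_ratio` that counts the fraction of matches whose displacement equals (50, 50). The sample pairs are copied from the `match_keypoints` output above; since `matches` has the same shape (a vector of two-element vectors of `CartesianIndex`), the same call should also work on the full result.

```julia
# Fraction of matches [p1, p2] whose displacement p2 - p1 equals the known shift.
inlier_ratio(ms, shift::CartesianIndex{2}) = count(m -> m[2] - m[1] == shift, ms) / length(ms)

# A few pairs copied from the match_keypoints output (first and last entries).
sample_matches = [
    [CartesianIndex(204, 164), CartesianIndex(254, 214)],
    [CartesianIndex(153, 204), CartesianIndex(203, 254)],
    [CartesianIndex(389, 217), CartesianIndex(439, 267)],
    [CartesianIndex(381, 450), CartesianIndex(431, 500)],
    [CartesianIndex(358, 455), CartesianIndex(408, 505)],
]

inlier_ratio(sample_matches, CartesianIndex(50, 50))   # 1.0 for these pairs
```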

This page was generated using DemoCards.jl and Literate.jl.