ORB descriptor

The ORB descriptor (Oriented FAST and Rotated BRIEF) is somewhat similar to BRIEF. It does not have an elaborate sampling pattern like BRISK or FREAK.

However, there are two main differences between ORB and BRIEF:

- ORB uses an orientation compensation mechanism, which makes it rotation invariant.
- ORB learns the optimal sampling pairs, whereas BRIEF uses randomly chosen sampling pairs.

ORB uses the intensity centroid as a measure of orientation. To compute the centroid, we first find the moments of the patch, defined as $M_{pq} = \sum_{x,y} x^p y^q I(x,y)$. The centroid, or "centre of mass", is then given by $C = \left(\frac{M_{10}}{M_{00}}, \frac{M_{01}}{M_{00}}\right)$.
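The moments and the centroid defined above can be computed directly. Below is a minimal Base Julia sketch, assuming the patch is an odd-sized numeric matrix, x is the column offset and y the row offset from the patch center, and the whole square patch is used for simplicity. The function names `patch_moment` and `intensity_centroid` are hypothetical and not part of ImageFeatures.

```julia
# Hypothetical sketch: moments M_pq of a patch, with (x, y) measured from the center.
# x = column offset, y = row offset; an odd-sized patch is assumed.
function patch_moment(patch::AbstractMatrix{<:Real}, p::Integer, q::Integer)
    cr, cc = (size(patch) .+ 1) .÷ 2      # center row and column
    m = 0.0
    for j in 1:size(patch, 2), i in 1:size(patch, 1)
        x, y = j - cc, i - cr              # offsets from the center
        m += x^p * y^q * patch[i, j]
    end
    return m
end

# Intensity centroid C = (M10/M00, M01/M00) and the orientation angle θ.
function intensity_centroid(patch::AbstractMatrix{<:Real})
    m00 = patch_moment(patch, 0, 0)
    m10 = patch_moment(patch, 1, 0)
    m01 = patch_moment(patch, 0, 1)
    centroid = (m10 / m00, m01 / m00)
    θ = atan(m01, m10)                     # two-argument atan, i.e. atan2(M01, M10)
    return centroid, θ
end
```

For a 5×5 patch whose only bright pixels form the rightmost column, this sketch gives the centroid (2.0, 0.0) and θ = 0.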

The vector from the corner's center to the centroid gives the orientation of the patch, i.e. the angle $\theta = \operatorname{atan2}(M_{01}, M_{10})$. Before the descriptor is computed, the patch can then be rotated to a predefined canonical orientation, which makes the descriptor rotation invariant.
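Resampling the whole patch is one way to reach the canonical orientation. An equivalent shortcut, used by the steered BRIEF variant in the ORB paper, is to rotate the sampling offsets by the patch angle θ and sample the unrotated image. A minimal sketch of that step, assuming offsets are integer (x, y) pairs relative to the keypoint and that rotated positions are rounded to the nearest pixel; `steer_offsets` is a hypothetical name:

```julia
# Hypothetical helper: steer a sampling pattern by the patch orientation θ.
# Each offset (x, y) is rotated by θ around the keypoint and rounded to a pixel.
function steer_offsets(offsets::AbstractVector{Tuple{Int,Int}}, θ::Real)
    s, c = sincos(θ)
    return [(round(Int, c * x - s * y), round(Int, s * x + c * y)) for (x, y) in offsets]
end
```

With θ = π/2, the offset (1, 0) becomes (0, 1) and (0, 2) becomes (-2, 0).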

ORB tries to choose sampling pairs that are uncorrelated, so that each new pair brings new information to the descriptor and the descriptor as a whole carries as much information as possible. We also want high variance among the pairs, which makes a feature more discriminative because it responds differently to different inputs. To achieve this, ORB considers the sampling pairs over keypoints in standard datasets and greedily evaluates all pairs in order of distance from the mean (how far each pair's mean response lies from 0.5) until the desired number of pairs, i.e. the descriptor size, is obtained.
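The greedy selection described above can be sketched in Julia as follows. This is an illustration under stated assumptions, not the ORB training code: `T` is a hypothetical Bool matrix whose rows are training keypoints and whose columns are candidate pairs; pairs are visited in order of how far their mean response is from 0.5, as in the ORB paper; and a pair is kept only if its absolute correlation with every pair kept so far is below `thresh`, whose default of 0.2 is an arbitrary choice.

```julia
using Statistics  # standard library: mean, std, cor

# Hypothetical sketch of ORB-style greedy pair selection.
# T[k, j] is the result of candidate pair j at training keypoint k.
function greedy_select(T::AbstractMatrix{Bool}, n::Integer; thresh::Real = 0.2)
    X = Float64.(T)
    means = vec(mean(X; dims = 1))               # mean response of each pair
    order = sortperm(abs.(means .- 0.5))         # closest to 0.5 (highest variance) first
    selected = Int[]
    for j in order
        length(selected) == n && break           # descriptor size reached
        std(X[:, j]) == 0 && continue            # a constant test carries no information
        # keep pair j only if it is weakly correlated with every pair kept so far
        if all(k -> abs(cor(X[:, j], X[:, k])) < thresh, selected)
            push!(selected, j)
        end
    end
    return selected
end
```

A pair that duplicates an already selected one is rejected by the correlation check, and a pair that always gives the same answer is skipped outright.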

The descriptor is built from intensity comparisons of the pairs: for each pair, if the first point has a greater intensity than the second, the corresponding bit of the descriptor is set to 1; otherwise it is set to 0.
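The bit-building rule above takes only a few lines. The sketch assumes a plain numeric intensity matrix (no pre-smoothing is applied, although BRIEF-style descriptors usually smooth the patch first) and sampling pairs given as `CartesianIndex` offsets from the keypoint; `binary_descriptor` is a hypothetical helper, not the ImageFeatures API.

```julia
# Hypothetical helper: build a binary descriptor around `center` from a list of
# sampling pairs (p, q), each given as a CartesianIndex offset from the center.
function binary_descriptor(image::AbstractMatrix{<:Real}, center::CartesianIndex{2},
                           pairs::AbstractVector{<:Tuple{CartesianIndex{2},CartesianIndex{2}}})
    desc = falses(length(pairs))                        # one bit per pair
    for (k, (p, q)) in enumerate(pairs)
        desc[k] = image[center + p] > image[center + q] # 1 if the first point is brighter
    end
    return desc
end
```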

Example

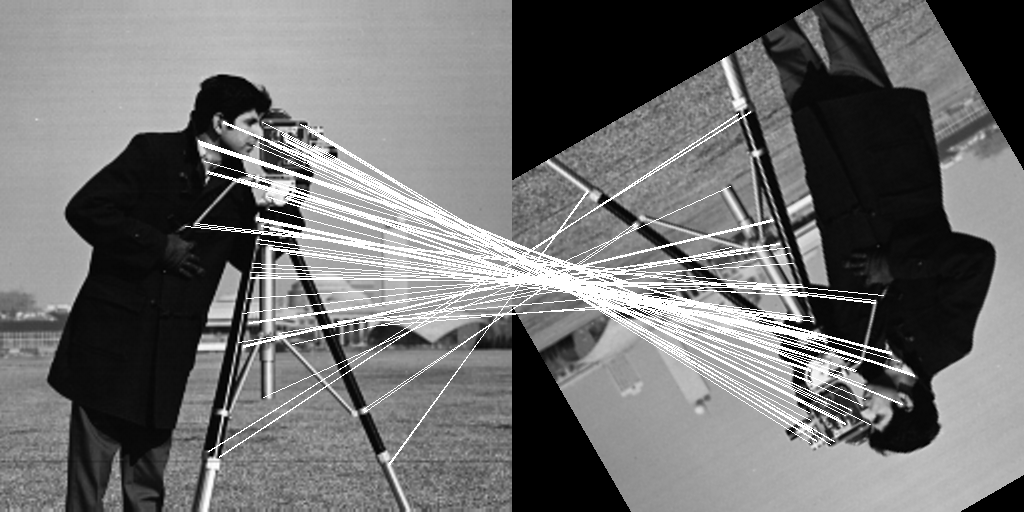

Let us look at a simple example in which the ORB descriptor is used to match two images, one of which has been translated by (50, 40) pixels and rotated by 5π/6 radians (150 degrees) about its center. The example uses the cameraman image from the TestImages package.

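First, let us create the two images that will be matched with ORB.

```julia
using ImageFeatures, TestImages, Images, ImageDraw, CoordinateTransformations, Rotations

img = testimage("cameraman")
img1 = Gray.(img)                     # grayscale first image

# rotation by 5π/6 rad (150°) about the image center
rot = recenter(RotMatrix(5pi/6), [size(img1)...] .÷ 2)
# composition applies the translation first, then the rotation
tform = rot ∘ Translation(-50, -40)
# second image: img1 warped with tform, keeping the axes of img1
img2 = warp(img1, tform, axes(img1))
```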
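To make the composed transform concrete, here is a Base-only Julia sketch of the coordinate map built above. It is not the CoordinateTransformations API: `rotation_matrix` and `sketch_tform` are hypothetical names, the rotation is a plain 2×2 matrix, and `recenter(R, c)` is assumed to mean x ↦ R(x − c) + c. The ImageTransformations documentation describes `warp(img, tform)` as producing `imgw[I] = img[tform(I)]`, so `tform` maps output coordinates back to input coordinates.

```julia
# Hypothetical Base-only sketch of `rot ∘ Translation(-50, -40)` from above.
rotation_matrix(θ) = [cos(θ) -sin(θ); sin(θ) cos(θ)]

function sketch_tform(θ, center, shift)
    R = rotation_matrix(θ)
    rotate_about_center(x) = R * (x - center) + center  # like recenter(RotMatrix(θ), center)
    translate(x) = x + shift                            # like Translation(shift...)
    return x -> rotate_about_center(translate(x))       # like rot ∘ Translation: translate first
end
```

For example, with an assumed center of [256, 256] (the center of a 512×512 image), `sketch_tform(5π/6, [256, 256], [-50, -40])` sends [306, 296] to [256, 256]: the translation moves the point onto the center, which the rotation leaves fixed.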

Unlike BRIEF, the ORB descriptor computes both the keypoints and the descriptor. To create an ORB descriptor, first define its parameters by calling the ORB constructor.

```julia
orb_params = ORB(num_keypoints = 1000)
```

```
ORB(1000, 12, 0.25, 0.04, 1.3, 8, 1.2)
```

Now pass each image together with the parameters to the create_descriptor function.

```julia
desc_1, ret_keypoints_1 = create_descriptor(img1, orb_params)
desc_2, ret_keypoints_2 = create_descriptor(img2, orb_params)
```

```
(BitVector[[0, 0, 0, 0, 1, 1, 0, 0, 0, 0 … 1, 0, 1, 0, 0, 0, 1, 1, 0, 1], [0, 1, 0, 0, 1, 1, 0, 0, 0, 1 … 1, 0, 1, 1, 0, 0, 1, 1, 0, 1], [0, 1, 1, 0, 0, 1, 1, 0, 0, 0 … 1, 1, 0, 1, 1, 0, 0, 1, 1, 0], [0, 0, 1, 1, 1, 1, 1, 0, 0, 0 … 1, 1, 0, 1, 0, 0, 1, 0, 0, 0], [0, 0, 0, 0, 0, 1, 0, 0, 0, 1 … 1, 0, 1, 1, 0, 0, 1, 1, 0, 1], [1, 0, 1, 1, 0, 0, 1, 0, 1, 1 … 1, 1, 1, 0, 0, 0, 0, 1, 1, 1], [0, 1, 1, 0, 1, 1, 1, 0, 0, 0 … 1, 0, 0, 1, 1, 0, 0, 1, 1, 0], [0, 0, 1, 1, 1, 1, 1, 0, 0, 0 … 1, 0, 0, 1, 0, 1, 1, 0, 0, 1], [0, 1, 0, 0, 1, 0, 1, 0, 1, 0 … 0, 0, 0, 0, 1, 0, 0, 0, 1, 0], [0, 1, 0, 1, 0, 0, 1, 0, 0, 0 … 0, 0, 1, 1, 0, 1, 1, 0, 1, 0] … [0, 0, 0, 0, 0, 0, 1, 0, 1, 1 … 1, 1, 1, 0, 0, 1, 1, 1, 1, 1], [0, 1, 1, 1, 0, 1, 1, 0, 1, 0 … 1, 0, 0, 1, 0, 1, 0, 1, 1, 1], [0, 1, 1, 0, 1, 1, 1, 1, 1, 0 … 0, 0, 1, 1, 0, 0, 0, 1, 0, 0], [0, 1, 1, 1, 0, 0, 0, 1, 0, 1 … 0, 1, 1, 1, 1, 0, 0, 0, 1, 0], [0, 1, 0, 0, 0, 0, 0, 1, 1, 0 … 1, 1, 1, 1, 0, 1, 1, 0, 1, 1], [0, 1, 1, 0, 0, 0, 1, 1, 1, 0 … 0, 1, 1, 0, 0, 0, 0, 0, 0, 1], [0, 0, 1, 1, 1, 1, 1, 0, 0, 0 … 1, 1, 0, 1, 0, 0, 0, 1, 0, 0], [1, 1, 0, 0, 0, 1, 1, 0, 1, 0 … 0, 1, 0, 0, 0, 1, 1, 0, 0, 1], [1, 0, 0, 1, 1, 0, 0, 0, 0, 1 … 0, 0, 1, 0, 1, 1, 0, 0, 0, 1], [1, 1, 0, 0, 1, 0, 0, 1, 1, 1 … 0, 0, 1, 1, 1, 1, 1, 0, 0, 0]], CartesianIndex{2}[CartesianIndex(160, 37), CartesianIndex(161, 37), CartesianIndex(336, 296), CartesianIndex(382, 299), CartesianIndex(161, 38), CartesianIndex(158, 242), CartesianIndex(336, 295), CartesianIndex(382, 300), CartesianIndex(150, 241), CartesianIndex(196, 298) … CartesianIndex(122, 235), CartesianIndex(248, 273), CartesianIndex(227, 162), CartesianIndex(365, 347), CartesianIndex(234, 184), CartesianIndex(263, 265), CartesianIndex(258, 262), CartesianIndex(164, 247), CartesianIndex(277, 283), CartesianIndex(275, 287)], [1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0 … 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0])
```

The resulting descriptors can be used to find matches between the two images with the match_keypoints function.

```julia
# the last argument is the distance threshold for accepting a match
matches = match_keypoints(ret_keypoints_1, ret_keypoints_2, desc_1, desc_2, 0.2)
```

```
122-element Vector{Vector{CartesianIndex{2}}}:
 [CartesianIndex(230, 271), CartesianIndex(337, 296)]
 [CartesianIndex(189, 291), CartesianIndex(382, 300)]
 [CartesianIndex(230, 272), CartesianIndex(337, 295)]
 [CartesianIndex(146, 315), CartesianIndex(432, 301)]
 [CartesianIndex(147, 314), CartesianIndex(431, 301)]
 [CartesianIndex(230, 275), CartesianIndex(338, 294)]
 [CartesianIndex(172, 209), CartesianIndex(355, 380)]
 [CartesianIndex(199, 287), CartesianIndex(371, 299)]
 [CartesianIndex(229, 271), CartesianIndex(338, 296)]
 [CartesianIndex(199, 286), CartesianIndex(370, 299)]
 ⋮
 [CartesianIndex(136, 283), CartesianIndex(425, 333)]
 [CartesianIndex(343, 239), CartesianIndex(254, 279)]
 [CartesianIndex(145, 201), CartesianIndex(374, 400)]
 [CartesianIndex(182, 236), CartesianIndex(360, 351)]
 [CartesianIndex(179, 240), CartesianIndex(364, 348)]
 [CartesianIndex(240, 259), CartesianIndex(322, 302)]
 [CartesianIndex(162, 289), CartesianIndex(404, 315)]
 [CartesianIndex(180, 239), CartesianIndex(363, 349)]
 [CartesianIndex(278, 251), CartesianIndex(287, 291)]
```

The results can be viewed with the ImageDraw.jl package.

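```julia
grid = hcat(img1, img2)                    # the two images side by side
offset = CartesianIndex(0, size(img1, 2))  # column shift of img2 inside grid

# draw one line segment per match, shifting the second point into the img2 half
map(m -> draw!(grid, LineSegment(m[1], m[2] + offset)), matches)

grid
```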
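The match_keypoints call above compares binary descriptors. The Base-only Julia sketch below shows one simple way such matching can work: brute-force nearest neighbour under the Hamming distance normalized by descriptor length, accepted only below a threshold. The normalization and the nearest-neighbour rule are assumptions made for illustration, and `hamming` and `brute_force_match` are hypothetical names; this is not ImageFeatures' implementation.

```julia
# Hypothetical sketch, not ImageFeatures' implementation.
# Normalized Hamming distance: fraction of bits in which two descriptors differ.
hamming(a::AbstractVector{Bool}, b::AbstractVector{Bool}) = count(a .⊻ b) / length(a)

# For every descriptor in d1, find the nearest descriptor in d2 (d2 must be non-empty)
# and keep the index pair (i, j) only if their distance is below `threshold`.
function brute_force_match(d1, d2, threshold::Real)
    found = Tuple{Int,Int}[]
    for (i, a) in enumerate(d1)
        dists = [hamming(a, b) for b in d2]
        j = argmin(dists)
        dists[j] < threshold && push!(found, (i, j))
    end
    return found
end
```

Under this rule, two 256-bit descriptors that differ in 40 bits have distance 40/256 ≈ 0.16 and would pass a 0.2 threshold.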

This page was generated using DemoCards.jl and Literate.jl.