Optimization

In this example, we will demonstrate the solution of several simple optimization problems: finding the minimum of a two-parameter function using a package Optim, an explicit implementation of gradient descent and the search for an optimal solution with constraints in a linear programming problem.

Let's make a preparatory cell for loading libraries.

Pkg.add(["GLPK", "LinearAlgebra", "StatsBase", "JuMP", "Optim"])

# Download the necessary libraries

using Plots

plotlyjs()

Optimization of the function of several variables

http://julianlsolvers.github.io/Optim.jl/v0.9.3/user/minimization/

Find the minimum of the function using the library Optim.

Specify the starting point .

using Optim

f_optim(x) = (1.0 - x[1])^2 + 100.0 * (x[2] - x[1]^2)^2

results_auto = Optim.optimize(f_optim, [0.0, 0.0])

print(results_auto)

Implementing gradient descent

https://nextjournal.com/DeepLearningNotes/Ch06StochasticGradientDescent

The objective of this exercise is to implement a linear regression procedure through gradient descent. First, run the preparation cell.

using Plots, LinearAlgebra, StatsBase

The input data for this task is created in the following cell.

regX = rand(100)

regY = 50 .+ 100 * regX + 2 * randn(100);

scatter( regX, regY, fmt = :png, legend=:bottomright, label="data" )

The exact solution to this problem can be expressed as follows.

ϕ = hcat(regX.^0, regX.^1);

δ = 0.01;

θ = inv(ϕ'ϕ + δ^2*I)*ϕ'regY;

Let's define a quadratic loss function and calculate the gradient. After this function, we can draw a graph of the optimization function, but we will draw it at the very end of the exercise.

function loss(X,y,θ)

cost = (y .- X*θ)'*(y .- X*θ);

grad = - 2 * X'y + 2*X'X*θ;

return (cost, grad)

end

function sgd(loss, X, y, θ_start, η, n, num_iters)

#=

List of arguments:

loss is a function that we optimize.,

it accepts dataset (X, y) and

the current set of parameters;

the function should return

loss value and gradient when setting parameters

theta0 -- initial estimate of the parameter vector θ

nn -- learning rate

n -- batch size

num_iters -- max.number of SGD runs

Returns:

theta -- vector of parameters at the end of SGD

path -- estimates of the optimal θ at each learning step

=#

data = [(X[i,:],y[i]) for i in 1:length(y)]

θ = θ_start

path = θ

for iter in 1:num_iters

s = StatsBase.sample(data, n)

Xs = hcat(first.(s)...)'

ys = last.(s)

cost, grad = loss(Xs,ys,θ)

θ = θ .- ( ( η * num_iters/(num_iters+iter) * grad) / n )

path = hcat(path,θ)

end

return (θ,path)

end

Graph output with optimization progress:

# The surface of the loss function

xr = range(40, stop=60, length=10);

yr = range(90, stop=110, length=10);

X = hcat(ones(length(regX)), regX);

f(x,y) = loss(X,regY,[x y]')[1][1];

plot(xr, yr, f, st=:wireframe, fmt = :png, label="Loss function", color="blue")

# Optimization paths

X = hcat(ones(length(regX)), regX);

y = regY;

(t,p) = sgd(loss, X, y, [40 90]', 0.1, 1, 500);

scatter!( p[1,:], p[2,:], f.(p[1,:],p[2,:]),

label="The optimization path", ms=3, markerstrokecolor = "white", camera=(40,30) )

Let's display the optimization results:

println("Coordinates of the found point where the function takes the minimum value:")

print( p[:,end] )

Finding the optimal solution with constraints

Suppose we are faced with such an optimization problem:

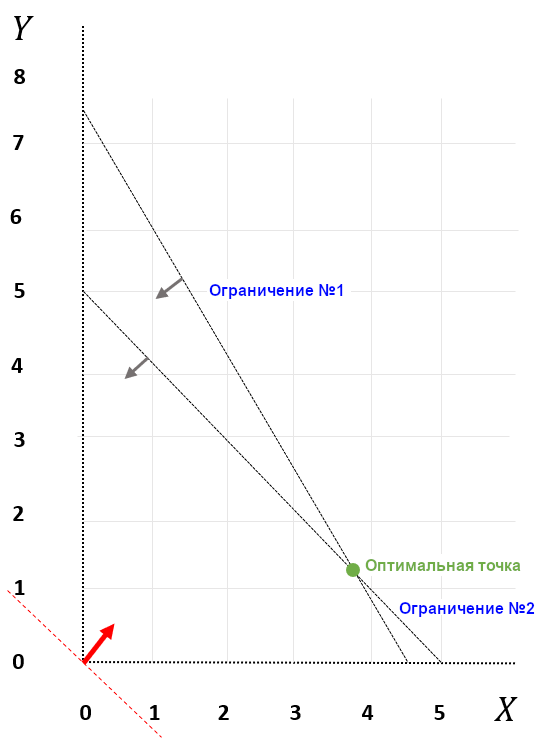

The range of acceptable values is shown in the figure (x and y are continuous, independent variables):

We are given an optimization objective (red arrow) and a direction (maximization), the green dot is the optimal solution, its coordinates are x = 3.75 and y = 1.25. The optimal value of the objective function is 23.75.

Let's solve this problem using the JuMP library Julia for optimization.

using JuMP, GLPK

# Setting the model

m = Model(); # m will mean "model"

# Let's choose an optimizer

set_optimizer(m, GLPK.Optimizer); # let's choose the GLPK solver (GNU Linear Programming Kit)

# Define

@variable(m, x>=0);

@variable(m, y>=0);

# Define the restrictions

@constraint(m, x+y<=5);

@constraint(m, 10x+6y<=45);

# Let's define the objective function

@objective(m, Max, 5x+4y);

# Let's launch the solver

optimize!(m);

# Conclusion

println(objective_value(m)) # The value of the objective function at the optimal point

println("x = ", value.(x), "\n","y = ",value.(y)) # Optimal x and y coordinates