Background noise reduction system

In this example, we consider two models that mute background noise in an audio signal by applying a gain factor.

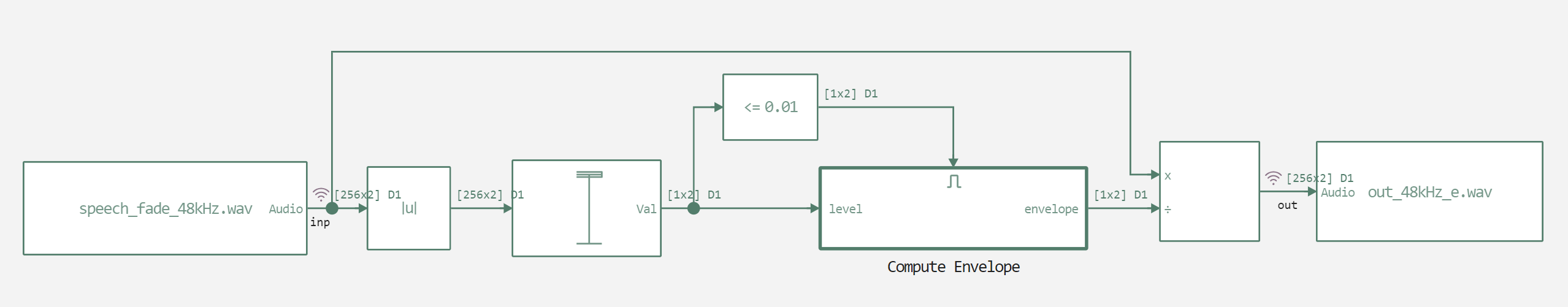

The figure above shows the noise-smoothing subsystem. In the first model, the subsystem runs in pipeline mode, that is, on every sample. In the second model, it is an enabled subsystem that runs only when the input signal crosses the threshold value, that is, when the input energy is low.
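The following minimal Julia sketch makes the difference between the two modes concrete. It is not the Engee model. The one-pole envelope follower, the gain rule `min(1, env / threshold)`, the enable condition `abs(x[n]) < threshold`, and the names `agc_sketch`, `alpha`, `threshold`, and `enabled_only` are all assumptions made for illustration. With `enabled_only=false`, the feedback loop updates on every sample (pipeline mode). With `enabled_only=true`, the envelope state is frozen whenever the enable condition is false, loosely mimicking an enabled subsystem whose states are held while it is disabled.

```julia
# Hypothetical sketch, not the Engee model: a one-pole envelope follower
# (a feedback loop with a one-sample delay) drives a gain that attenuates
# low-level samples.
function agc_sketch(x::AbstractVector{<:Real};
                    alpha::Float64 = 0.99,      # envelope smoothing factor (assumed)
                    threshold::Float64 = 0.05,  # level below which the gain drops (assumed)
                    enabled_only::Bool = false) # false: pipeline mode, true: enabled mode
    y = similar(x, Float64)
    env = 0.0                                   # delay state, initial value zero
    for n in eachindex(x)
        # Pipeline mode updates every sample; enabled mode updates only while
        # the input is below the threshold (assumed enable condition).
        active = !enabled_only || abs(x[n]) < threshold
        if active
            env = alpha * env + (1 - alpha) * abs(x[n])
        end                                     # otherwise the state is held
        gain = min(1.0, env / threshold)        # low envelope -> low gain
        y[n] = gain * x[n]
    end
    return y
end
```

For a constant full-scale input such as `ones(100)`, the pipelined version ramps the gain up to 1 within a few samples. The enabled version never updates its envelope, because the input never drops below the threshold, so its gain stays at zero.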

Next, we declare helper functions for running models and playing audio files.

```julia
import Pkg
Pkg.add(["WAV"])
```

```julia
# Helper: load the model from the current directory if it is not already open,
# run it, and return the simulation results.
function run_model(name_model)
    path = joinpath(@__DIR__, name_model * ".engee")
    if name_model in [m.name for m in engee.get_all_models()] # Is the model already loaded?
        model = engee.open(name_model)                # Open the loaded model
        model_output = engee.run(model, verbose=true) # Run the model
    else
        model = engee.load(path, force=true)          # Load the model from file
        model_output = engee.run(model, verbose=true) # Run the model
        engee.close(name_model, force=true)           # Close the model
    end
    sleep(5)
    return model_output
end
```

```julia
using WAV
using Base64   # provides base64encode
using .EngeeDSP

# Helper: load an audio file, embed it in an HTML audio player, and return the samples.
function audioplayer(path, fs, Samples_per_audio_channel)
    s = vcat((EngeeDSP.step(load_audio(), path, Samples_per_audio_channel))...)
    buf = IOBuffer()
    wavwrite(s, buf; Fs=fs)            # encode the samples as WAV in memory
    data = base64encode(take!(buf))    # base64-encode the WAV bytes for the data URI
    display("text/html", """<audio controls="controls">
    <source src="data:audio/wav;base64,$data" type="audio/wav" />
    Your browser does not support the audio element.
    </audio>""")
    return s
end
```

With the helper functions declared, we can run the models.

First, run the model with the pipelined subsystem.


```julia
run_model("agc_sub") # Run the model with the pipelined subsystem
```

Next, run the model with the enabled subsystem.


```julia
run_model("agc_enabled") # Run the model with the enabled subsystem
```

Now let's examine the recorded WAV files and compare them with the original audio track, starting by listening to each one.

```julia
# Original track, then the outputs of the two models
inp   = audioplayer("$(@__DIR__)/speech_fade_48kHz.wav", 48000, 256);
out_s = audioplayer("$(@__DIR__)/out_48kHz_s.wav", 48000, 256);
out_e = audioplayer("$(@__DIR__)/out_48kHz_e.wav", 48000, 256);
```
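This example compares the tracks by listening. A rough numeric check can complement that. The sketch below is an assumption rather than part of the original example. It computes the RMS level of a track relative to a reference in decibels. The helper names `rms_level` and `level_db` are hypothetical. The sketch relies only on `audioplayer` returning the sample array.

```julia
# Hypothetical helpers for a rough numeric comparison of audio tracks.

# RMS level of a signal; works for a vector or a multichannel matrix.
rms_level(x::AbstractArray{<:Real}) = sqrt(sum(abs2, x) / length(x))

# Level of `y` relative to the reference `ref`, in decibels.
level_db(ref::AbstractArray{<:Real}, y::AbstractArray{<:Real}) =
    20 * log10(rms_level(y) / rms_level(ref))
```

With the arrays returned by `audioplayer`, `level_db(inp, out_s)` and `level_db(inp, out_e)` give the overall level of each output relative to the original track. A single RMS figure does not show where in the track the distortion occurs, so it complements listening rather than replacing it.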

Conclusion

After listening to these tracks, we can hear that the output of the pipelined subsystem is more uniform and less distorted than the output of the enabled subsystem. This is because the feedback loop in the pipelined subsystem is updated on every clock cycle. In the enabled subsystem, it is updated only while the control (enable) signal is present. The Delay block inside the Compute Envelope subsystem has an initial state of zero. You can experiment with larger initial values and observe how the initial state affects the degree of distortion in the audio track.
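A bare envelope follower shows how the initial state matters. In this hypothetical sketch, which is not the Compute Envelope subsystem itself, `state` plays the role of the Delay block in the feedback loop and `init` is its initial condition. The function name `envelope` and the smoothing factor `alpha` are assumptions. Because the recursion is linear in its state, a nonzero `init` adds a decaying transient of `init * alpha^n` to the output. Any gain derived from the envelope then inherits that transient.

```julia
# Hypothetical one-pole envelope follower; `state` stands in for the Delay
# block in the feedback loop and `init` for its initial condition.
function envelope(x::AbstractVector{<:Real}; alpha::Float64 = 0.99, init::Float64 = 0.0)
    env = similar(x, Float64)
    state = init
    for n in eachindex(x)
        state = alpha * state + (1 - alpha) * abs(x[n])  # feedback update
        env[n] = state
    end
    return env
end
```

For example, `envelope(zeros(3); init=1.0)` decays as `0.99`, `0.99^2`, `0.99^3`, while the same call with `init=0.0` stays at zero.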